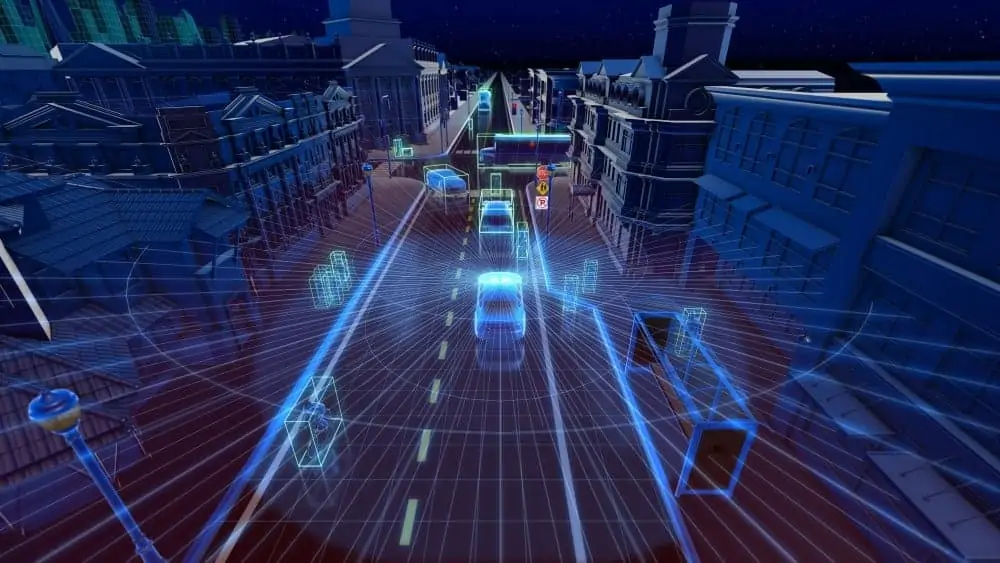

Velodyne Lidar has announced that its surround-view lidar solutions for collecting rich perception data in testing and validation are available on the NVIDIA DRIVE autonomous driving platform. The sensors provide full 360-degree perception in real time, which supports highly accurate localization and path planning.

Velodyne sensors’ characteristics are also available on NVIDIA DRIVE Constellation, an open, scalable simulation platform that enables large-scale, bit-accurate hardware-in-the-loop testing of autonomous vehicles. The solution’s DRIVE Sim software simulates lidar and other sensors, recreating a self-driving car’s inputs with high fidelity in the virtual world.

“Velodyne and NVIDIA are at the forefront delivering the high-resolution sensing and high-performance computing needed for autonomous driving,” said Mike Jellen, president and chief commercial officer of Velodyne Lidar. “As an NVIDIA DRIVE ecosystem partner, our intelligent lidar sensors are foundational to advance vehicle autonomy, safety, and driver assistance systems at leading global manufacturers.”


Velodyne provides a broad portfolio of lidar solutions spanning the full product range required for advanced driver assistance and autonomy by automotive OEMs, truck OEMs, delivery manufacturers, and Tier 1 suppliers. Proven through learning from millions of road miles, Velodyne sensors help determine the safest way to navigate and direct a self-driving vehicle. Adding Velodyne sensors enhances Level 2+ advanced driver assistance system (ADAS) features, including Automatic Emergency Braking (AEB), Adaptive Cruise Control (ACC), and Lane Keep Assist (LKA).

“Velodyne’s lidar sensors help deliver the intelligence to enable automated driving systems and roadway safety by detecting more objects and presenting vehicles with more in-depth views of their surrounding environments,” said Glenn Schuster, senior director of sensor ecosystem development at NVIDIA.