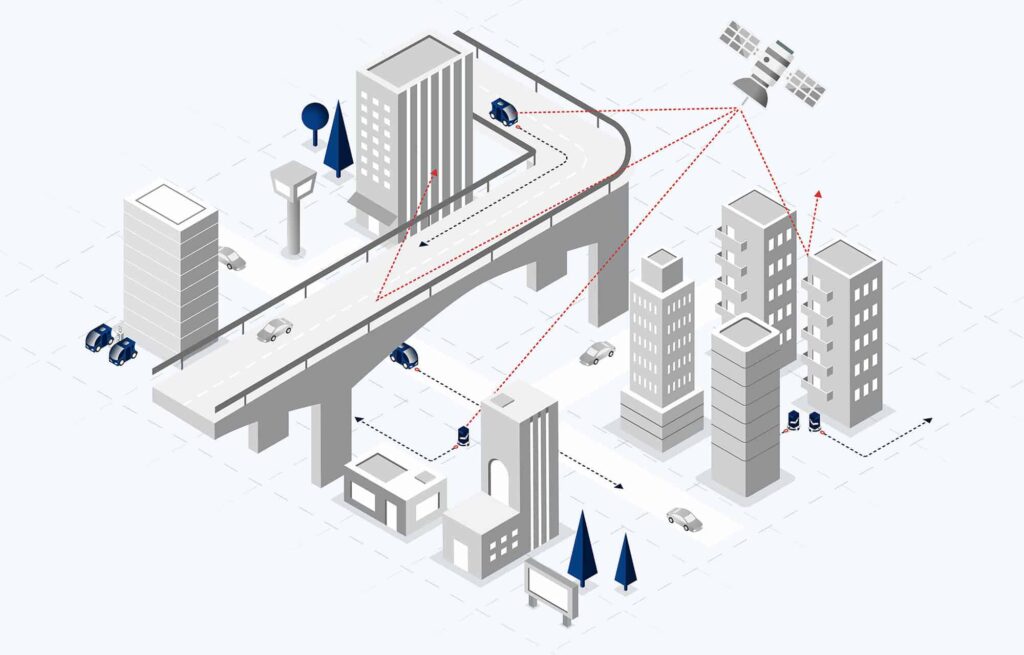

Fixposition, a leading developer of autonomous guidance sensors with high-precision positioning, has partnered with Unmanned Systems Technology (“UST”) to demonstrate its expertise in this field. The ‘Gold’ profile highlights how the company’s technology, which combines high-precision RTK-GNSS with deeply fused inertial and visual sensors, can provide precise global positioning for autonomous vehicles anytime and anywhere.

The Vision-RTK 2 is a lightweight, compact off-the-shelf system that can be easily integrated into a wide range of autonomous vehicles and platforms.

Featuring industry-standard connectors, it provides plug-and-play autonomy for logistics, landscaping, urban delivery, lawn mowers and more, helping you simplify development and reduce time-to-market. The solution is available in a weatherproof enclosure or as an OEM board, and includes an intuitive web-based interface for setup and monitoring, with a dashboard for visualizing your data.

The Vision-RTK 2 system feeds all available sensor data into Fixposition’s deep sensor fusion engine, combining absolute GNSS positioning with relative (inertial and visual) motion estimates. This compensates for the weaknesses of individual sensors and removes the drift that accumulates over time in IMU-based solutions. The result is robust and precise positioning even in GNSS-degraded or GNSS-denied areas.
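
Why fusing GNSS bounds IMU drift can be shown with a toy model. The Python sketch below is not Fixposition’s fusion engine, which is not described here; it is a textbook linear Kalman filter under stated assumptions: motion along a single axis, an accelerometer with a constant uncorrected bias, and a 1 Hz GNSS position fix that the filter treats as having about 2 cm of noise. Integrating the biased IMU alone drifts quadratically (½·b·t², about 90 m after 60 s for b = 0.05 m/s²), while periodic GNSS updates keep the fused error small. The constants `DT`, `GNSS_EVERY`, `ACC_BIAS` and the function `simulate` are hypothetical.

```python
import numpy as np

# Hypothetical parameters for the toy model (not Fixposition values).
DT = 0.01          # IMU sample period in seconds (100 Hz)
GNSS_EVERY = 100   # one GNSS fix every 100 IMU samples, i.e. 1 Hz
ACC_BIAS = 0.05    # constant accelerometer bias in m/s^2


def simulate(seconds: float = 60.0):
    """Return (true position, IMU-only position, fused position) at the end."""
    steps = round(seconds / DT)
    F = np.array([[1.0, DT], [0.0, 1.0]])  # constant-velocity state transition
    B = np.array([0.5 * DT**2, DT])        # how measured acceleration enters the state
    H = np.array([[1.0, 0.0]])             # GNSS observes position only
    Q = np.diag([1e-6, 1e-4])              # process noise; lets the filter absorb the unmodelled bias
    R = np.array([[0.02**2]])              # assumed ~2 cm GNSS/RTK position noise

    true_v = 1.0
    dead_reckoned = np.array([0.0, true_v])  # state [position, velocity], IMU only
    x = np.array([0.0, true_v])              # fused state estimate
    P = np.eye(2) * 1e-3
    true_pos = 0.0

    for k in range(1, steps + 1):
        true_pos = true_v * k * DT
        a_meas = 0.0 + ACC_BIAS  # true acceleration is zero; the sensor reports only its bias

        # Prediction: both estimators integrate the biased acceleration.
        dead_reckoned = F @ dead_reckoned + B * a_meas
        x = F @ x + B * a_meas
        P = F @ P @ F.T + Q

        # Correction: only the fused estimator uses the GNSS position fix.
        if k % GNSS_EVERY == 0:
            z = np.array([true_pos])
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)
            x = x + K @ (z - H @ x)
            P = (np.eye(2) - K @ H) @ P

    return true_pos, dead_reckoned[0], x[0]
```

Calling `truth, imu_only, fused = simulate()` and comparing `abs(imu_only - truth)` with `abs(fused - truth)` shows the IMU-only error growing to tens of metres while the fused error stays near the GNSS noise level.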

Two dual-band receivers use signals from all four global satellite systems (GPS, GLONASS, BeiDou, and Galileo) to determine the sensor’s absolute position and orientation. Real-time kinematic (RTK) corrections are used to correct errors and achieve centimeter-level positioning accuracy. The correction data is delivered to the sensor via NTRIP (Networked Transport of RTCM via Internet Protocol) and can be obtained from publicly available Virtual Reference Station (VRS) networks or from a local physical base station.
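
To make the NTRIP step concrete, the Python sketch below builds the two messages a generic NTRIP 1.0 client typically sends to a caster: an HTTP-style request for a mountpoint with Basic authentication and, for VRS networks that need the rover’s approximate location, an NMEA GGA sentence. It is not Fixposition’s client. The mountpoint, credentials, client name and the fixed GGA fields (UTC time, fix quality, satellite count, HDOP) are placeholders, the function names are hypothetical, and no network connection is opened.

```python
import base64
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of every character between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def deg_to_nmea(value: float, deg_width: int) -> str:
    """Decimal degrees -> NMEA (d)ddmm.mmmm; the hemisphere letter is added separately."""
    total_minutes = round(abs(value) * 60.0, 4)  # round first so 59.99999' carries into degrees
    deg = int(total_minutes // 60)
    minutes = total_minutes - deg * 60
    return f"{deg:0{deg_width}d}{minutes:07.4f}"


def gga_sentence(lat: float, lon: float, alt_m: float, utc: str = "120000.00") -> str:
    """Minimal GGA sentence reporting an approximate rover position (placeholder fields)."""
    body = ",".join([
        "GPGGA", utc,
        deg_to_nmea(lat, 2), "N" if lat >= 0 else "S",
        deg_to_nmea(lon, 3), "E" if lon >= 0 else "W",
        "1", "12", "1.0",        # placeholder fix quality, satellite count, HDOP
        f"{alt_m:.1f}", "M",     # altitude above mean sea level
        "0.0", "M",              # placeholder geoid separation
        "", "",                  # no differential age or station ID
    ])
    return f"${body}*{nmea_checksum(body)}\r\n"


def ntrip_v1_request(mountpoint: str, user: str, password: str) -> str:
    """NTRIP 1.0 style request for a caster mountpoint (placeholder client name)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")
    return (
        f"GET /{mountpoint} HTTP/1.0\r\n"
        "User-Agent: NTRIP ExampleClient/0.1\r\n"
        f"Authorization: Basic {token}\r\n"
        "\r\n"
    )
```

In practice a client opens a TCP connection to the caster, sends the request and, on a success reply (`ICY 200 OK` for NTRIP 1.0), sends the GGA sentence periodically while reading the binary RTCM correction stream.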

Distinctive points in the camera images (visual features) are extracted and tracked across multiple frames. Repeated observations of the same features in successive images allow the system to compute how the camera moved between captures.
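
Recovering camera motion from tracked features can be sketched in a simplified planar setting. This is an illustration under assumptions, not Fixposition’s visual pipeline: feature detection and matching are taken as already done, the tracked points are static and expressed as 2-D coordinates in the camera’s frame (for example, a downward-looking camera over flat ground), and the rotation and translation are solved with the Kabsch (Procrustes) least-squares method. Real visual odometry estimates full 3-D motion, for instance from the essential matrix. The function names are hypothetical.

```python
import numpy as np


def fit_rigid_2d(prev_pts, curr_pts):
    """Least-squares R, t such that curr ≈ R @ prev + t (Kabsch / Procrustes)."""
    p = np.asarray(prev_pts, dtype=float)
    q = np.asarray(curr_pts, dtype=float)
    p_mean, q_mean = p.mean(axis=0), q.mean(axis=0)
    # Cross-covariance of the centred point sets (rows are points).
    H = (p - p_mean).T @ (q - q_mean)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t


def camera_motion(prev_pts, curr_pts):
    """Camera rotation and translation between frames, in the previous camera frame.

    Static points appear to move by the inverse of the camera motion, so the
    fitted point transform (R, t) is inverted: R_cam = R.T, t_cam = -R.T @ t.
    """
    R, t = fit_rigid_2d(prev_pts, curr_pts)
    return R.T, -R.T @ t
```

Because the features are static, their apparent motion is the inverse of the camera’s motion, which is why `camera_motion` inverts the fitted transform. With noisy or mismatched features, the fit would normally be wrapped in a robust estimator such as RANSAC.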

Vision-RTK 2 is ideal for a wide range of robotics applications, including delivery, precision agriculture, and landscaping. Fixposition can work with you to determine the specifics of your hardware and software platforms and the unique requirements of your application, fine-tune your design to optimize performance, and continue to support you during the production phase.

To find out more about Fixposition and their precise positioning solutions for autonomous vehicles, please visit their profile page: https://www.unmannedsystemstechnology.com/company/fixposition/