VT MAK has announced the release of version 1.3 of the company’s VR-Engage multi-role virtual simulator, which allows users to act as the pilot or sensor operator of a wide variety of unmanned aerial vehicles (UAVs) as well as manned aircraft. VR-Engage 1.3 adds major new capabilities, including a new Sensor Operator role, new virtual and augmented/mixed-reality features, enhanced voice-over-DIS/HLA radios, and expanded terrain and content support.

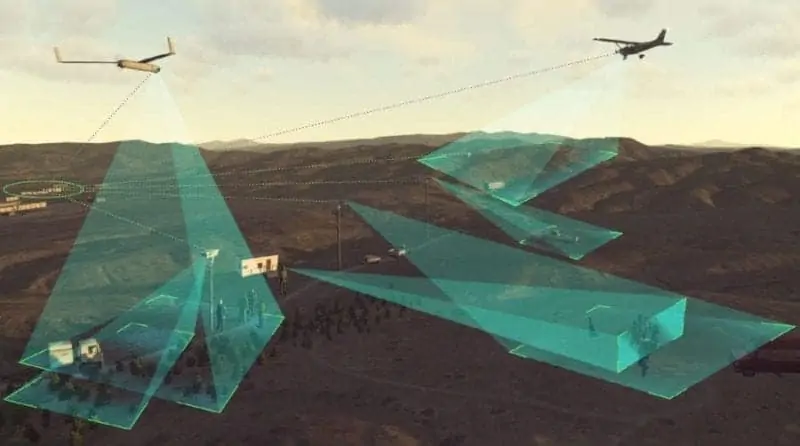

VR-Engage’s new Sensor Operator role allows users to perform common surveillance and reconnaissance tasks by controlling simulated UAV cameras, gimbaled sensors, targeting pods, and fixed security cameras. Steer, zoom, and track targets using standard joysticks and game controllers, or configure VR-Engage for use with custom sensor-specific hand controller devices. The new capability provides users with the ability to:

- Attach a gimbaled sensor to any DIS or HLA entity, such as a UAV, ship, or manned aircraft – even if the entity is simulated by an external third-party application that was not built to include sensor capabilities

- Take manual control of a sensor that has been configured on a VR-Forces CGF entity

- Execute a multi-crew aircraft simulation – using one copy of VR-Engage for the pilot to fly the aircraft, and a second for the Sensor Operator to control the targeting pod

- Place fixed or user-controllable remote cameras directly onto the terrain, and stream the resulting simulated video into real security applications or command and control systems using open standards like H.264 or MPEG-4

- Deploy a complete simulated UAV Ground Control Station by using the VR-Forces GUI to “pilot” the aircraft by assigning waypoints, routes, and missions, while using VR-Engage’s Sensor Operator capability to control and view the sensor on a second screen

VR-Engage comes with MAK’s built-in CameraFX module, which allows users to control blur, noise, gain, color, and many other camera or sensor post-processing effects. The optional SensorFX add-on can be used to increase the fidelity of an IR scene – SensorFX models the physics of light and its response to various materials and the environment, as well as the dynamic thermal response of engines, wheels, smokestacks, and more. VR-Engage also supports customizable information overlays that can be displayed on top of the camera or sensor view.

VR-Engage’s virtual-reality capabilities now include the following significant enhancements:

- Support for a wide variety of VR devices, including the HTC VIVE, VIVE Pro, and Oculus Rift, as well as other devices that support the OpenVR standard

- Support for hand controllers for both the HTC VIVE and Oculus Rift – allowing users to point and aim the virtual gun by physically moving their hands, while controlling the character’s movement using the devices’ built-in joypads

- The action menu and other overlays are now projected onto a 3D billboard in VR

- A new high-fidelity 3D fighter aircraft interior model, with real geometry for switches and buttons, and working instruments rendered to the 3D panels – for a truly immersive VR flight simulation experience

Virtual reality applications are visually immersive, but generally lack the ability to interact with physical devices, because the user cannot see their hands, or the devices they need to interact with. To overcome this limitation, VT MAK has introduced Augmented/Mixed Reality support in VR-Engage 1.3.

VR-Engage can receive real-time mono or stereo video feeds of the real world from forward-facing cameras mounted on a head-mounted display (HMD), and can combine real and virtual elements into a unified mixed-reality scene projected onto the HMD’s stereo displays. Automatic masking of desired real-world elements such as hands and body, touchscreens, and real devices such as throttle/stick and pedals or instrumented guns is achieved through a combination of green-screen (chroma key) techniques and geometry masks. VR-Engage has been tested and demonstrated with the HTC VIVE’s built-in mono camera, the VIVE Pro’s built-in stereo cameras, and with the higher-fidelity ZED Mini stereo cameras – which can be mounted on any vendor’s HMD.
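As a rough illustration of how chroma keying and a geometry mask can be combined, the sketch below composites a camera frame over a rendered frame using NumPy. It is not VR-Engage code: the function names, the key color, the tolerance value, and the choice to treat the geometry mask as "always show the real world here" are all assumptions made for the example.

```python
import numpy as np


def chroma_key_mask(frame, key_rgb=(0, 255, 0), tolerance=80.0):
    """Return a boolean mask that is True where a pixel is NOT close to the key color.

    Pixels far from the green-screen color are assumed to be real-world
    content (hands, controls) that should stay visible.
    """
    diff = frame.astype(np.float32) - np.asarray(key_rgb, dtype=np.float32)
    distance = np.linalg.norm(diff, axis=-1)  # per-pixel RGB distance to the key
    return distance > tolerance


def composite(camera, virtual, geometry_mask=None, key_rgb=(0, 255, 0), tolerance=80.0):
    """Blend a camera frame with a rendered frame of the same shape (H, W, 3).

    geometry_mask (hypothetical) is an optional boolean (H, W) array marking
    screen regions where the real world is always shown, regardless of color.
    """
    keep_real = chroma_key_mask(camera, key_rgb, tolerance)
    if geometry_mask is not None:
        keep_real = keep_real | geometry_mask
    # Broadcast the 2D mask over the color channels and pick per pixel.
    return np.where(keep_real[..., None], camera, virtual)
```

In this sketch, green-screen pixels are replaced by the virtual scene, while anything that is not key-colored, or that falls inside the geometry mask, shows the live camera image. A production system would typically key in a color space such as HSV or YCbCr and soften the mask edges.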

VT MAK has enhanced VR-Engage’s built-in voice-communications model and interface (voice over DIS/HLA) – including more flexible configuration of multiple radios and channels, an updated UI, and the ability for an instructor to jam radios directly from the VR-Forces GUI.

VR-Engage 1.3 is built on VR-Forces 4.6.1 and VR-Vantage 2.3.1, so it is able to leverage new terrain, content, and performance improvements that were added in those versions, including:

- Support for CDB terrains

- Advanced procedural terrain techniques, including blending of high-resolution imagery with even-higher-resolution micro-detail based on land use data

- Improved performance through the use of Indirect Rendering with Bindless Textures

- Increased visual quality through Physically-based Rendering (PBR), with support for metal maps and Fresnel effects

In addition, VR-Engage now comes with several updated ground vehicle models with 3D interiors, new DI-Guy characters, and access to MAK’s latest high-fidelity desert village terrain – based on the “Kilo2” MOUT site at the Camp Pendleton Marine Corps base. Along with this new terrain, VT MAK now provides a new demo scenario that shows off many of the capabilities of VR-Engage and VR-Forces.